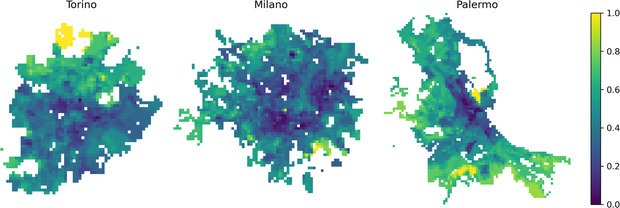

A data-driven analysis of the impact of non-compliant individuals on epidemic diffusion in urban settings

Our recent research on the role of individuals who do not comply with public health safety measures in epidemic contexts has been published in the Proceedings of the Royal Society A. The study shows how risky behaviours in pandemic and epidemic settings can undermine public health interventions. This is particularly relevant in urban …

Author: Marco Brambilla

A Graph-based RAG for Energy Efficiency Question Answering

In this work, we investigate the use of Large Language Models (LLMs) within a Graph-based Retrieval-Augmented Generation (RAG) architecture for Energy Efficiency (EE) Question Answering. First, the system automatically extracts a Knowledge Graph (KG) from guidance and regulatory documents in the energy field. Then, the generated graph is navigated and reasoned upon to provide users …

M.Sc. Thesis Topics and Proposals at Polimi Data Science Lab – 2023/24

Within the context of our Data Science Lab and research team, we offer a variety of thesis options. Check them out in these slide decks:

https://www.slideshare.net/slideshow/embed_code/key/44O3NXu3iERiyS?hostedIn=slideshare&page=upload

https://www.slideshare.net/slideshow/embed_code/key/xg4X4dr14ySsWt?hostedIn=slideshare&page=upload

Hierarchical Transformers for User Semantic Similarity

We discuss the use of hierarchical transformers for user semantic similarity in the context of analyzing users' behavior and profiling social media users. The objectives of the research include finding the best model for computing semantic user similarity, exploring the use of transformer-based models, and evaluating whether the embeddings reflect the desired similarity concept and can be used for other tasks.

Online courses on Data, Policies, and COVID-19

Five online courses (MOOCs) that collect technical and policy solutions to pandemic challenges have been published on Coursera.

Exploring the bi-verse: a trip across the digital and physical ecospheres

I've been invited to give a keynote talk at the WISE 2022 Conference. Thinking about it, I decided to focus on my idea of a bi-verse. To me, the bi-verse is the duality between the physical and digital worlds. On one side, the Web and social media are the environments where people post their content, …

The Role of Human Knowledge in Explainable AI

We published a review article that aims to present a literature overview on collecting and employing human knowledge to improve and evaluate the understandability of machine learning models through human-in-the-loop approaches.

The VaccinEU dataset of COVID-19 Vaccine Conversations on Twitter in French, German, and Italian

Despite the increasing limitations for unvaccinated people, in many European countries there is still a non-negligible fraction of individuals who refuse to get vaccinated against SARS-CoV-2, undermining governmental efforts to eradicate the virus. Within the PERISCOPE project, we studied the role of online social media in influencing individuals' opinions about getting vaccinated by designing a …

Model Driven Software Engineering in Practice now published by Springer Nature

As of June 2022, our book "Model Driven Software Engineering in Practice" (co-authored with Jordi Cabot and Manuel Wimmer) is also available via Springer. This means the price is actually lower, and if you are affiliated with an academic institution, you may even have free access to the book through your institution. Check it out here. …

EXP-Crowd: Gamified Crowdsourcing for AI Explainability

The spread of AI and black-box machine learning models makes it necessary to explain their behavior. Consequently, the research field of Explainable AI was born. The main objective of an Explainable AI system is to be understood by a human as the final beneficiary of the model. In our research we just published on Frontiers …