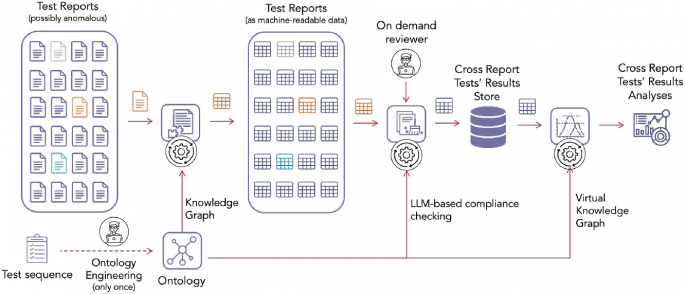

Integrating Large Language Models and Knowledge Graphs for Extraction and Validation of Textual Data

Large manufacturing companies in mission-critical sectors such as aerospace, healthcare, and defense typically design, develop, integrate, verify, and validate products characterized by high complexity and low volume. They carefully document all phases for each product, but analyses across products are challenging because the data in these documents is heterogeneous and unstructured. In our research, …

Category: Machine Learning / AI

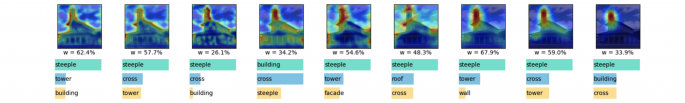

Interpretable Network Visualizations: A Human-in-the-Loop Approach for Post-hoc Explainability of CNN-based Image Classification

Transparency and explainability in image classification are essential for establishing trust in machine learning models and for detecting biases and errors. State-of-the-art explainability methods generate saliency maps that show where a specific class is identified, but they do not explain the model's decision process in detail. To address this gap, we introduce a post-hoc method …