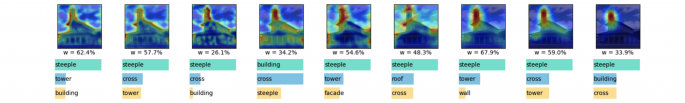

Transparency and explainability in image classification are essential for establishing trust in machine learning models and detecting biases and errors. State-of-the-art explainability methods generate saliency maps to show where a specific class is identified, without providing a detailed explanation of the model's decision process. Striving to address such a need, we introduce a post-hoc method … Continue reading Interpretable Network Visualizations: A Human-in-the-Loop Approach for Post-hoc Explainability of CNN-based Image Classification