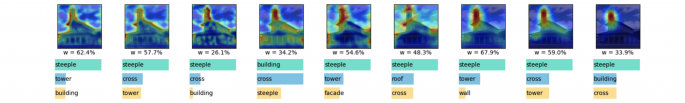

Transparency and explainability in image classification are essential for establishing trust in machine learning models and for detecting biases and errors. State-of-the-art explainability methods generate saliency maps that show where a specific class is identified, but they do not explain the model's decision process in detail. To address this need, we introduce a post-hoc method … Continue reading Interpretable Network Visualizations: A Human-in-the-Loop Approach for Post-hoc Explainability of CNN-based Image Classification

Tag: explainability

The Role of Human Knowledge in Explainable AI

We published a review article that surveys the literature on collecting and using human knowledge to improve and evaluate the understandability of machine learning models through human-in-the-loop approaches.

EXP-Crowd: Gamified Crowdsourcing for AI Explainability

The spread of AI and black-box machine learning models makes it necessary to explain their behavior. Consequently, the research field of Explainable AI was born. The main objective of an Explainable AI system is to be understood by a human as the final beneficiary of the model. In our research we just published on Frontiers …